GPT-4 and Beyond: Evaluating Cognitive Skills of AI

Large language models aren’t human beings. Let’s stop testing them as if they were.

When Taylor Webb experimented with GPT-3 in early 2022, he was amazed by what OpenAI’s large language model could do. Trained merely to predict the next word in a text, GPT-3 managed to solve abstract problems resembling those found in IQ tests. Webb, a psychologist at the University of California, Los Angeles, who studies how people and computers solve problems, had expected far less.

Webb was used to building neural networks with specific reasoning abilities added on, so seeing GPT-3 pick up such skills on its own was astonishing.

Webb and colleagues have since published a study in Nature detailing GPT-3’s capacity to pass various tests of analogical reasoning, the ability to solve a new problem by mapping it onto a familiar one. In some of these tests, GPT-3 outperformed university students. Webb explained, “Analogical reasoning is crucial in human thinking. It’s a skill we expect any machine intelligence to demonstrate.”

These accomplishments are the latest in a series of impressive feats by large language models. When OpenAI introduced GPT-4 in March 2023, the company highlighted its success in a wide range of professional and academic assessments, including high school tests and the bar exam. OpenAI also collaborated with Microsoft to show that GPT-4 can pass parts of the United States Medical Licensing Examination.

Various researchers also claim that large language models can excel in tests designed to evaluate specific human cognitive abilities, such as step-by-step reasoning and understanding the perspectives of others.

These results have fed expectations that such machines will soon displace people in fields like teaching, medicine, journalism, and law. Geoffrey Hinton, a key figure in AI, has cited GPT-4’s apparent ability to string together thoughts as one reason for his concern about the technology he helped create.

But there is a problem: there is little consensus on what these results actually mean. Some are amazed by what they consider human-like intelligence displayed by these models, while others remain unconvinced.

Natalie Shapira, a computer scientist at Bar-Ilan University in Israel, points out issues with current evaluation techniques for large language models, explaining that they often create a false impression of the models’ capabilities.

This has prompted various researchers—computer scientists, cognitive scientists, neuroscientists, and linguists—to call for more rigorous evaluation methods. Some argue that scoring machines using human tests is fundamentally flawed and should be abandoned.

Melanie Mitchell, an AI researcher, explains, “Since the inception of AI, machines have been subjected to human intelligence evaluations—such as IQ tests and the like. The crux of the matter has perpetually been the interpretation of such evaluations for machines. The implications for machines are not equivalent to those for humans.”

With both the hopes and the fears surrounding this technology at an all-time high, it’s crucial to gain a clear understanding of what large language models can and cannot do.

Open to Interpretation

The main issues with evaluating large language models revolve around how we interpret the results.

Assessments designed for humans, like high school exams and IQ tests, take a lot for granted. When humans score well, we assume they possess the knowledge, understanding, or cognitive skills the test measures. But when a large language model does well on such tests, it’s unclear what’s actually being measured. Is it genuine understanding? A statistical trick? Simple memorization?

Laura Weidinger, a senior research scientist at Google DeepMind, says, “There’s a long history of developing methods to test the human mind. With large language models producing human-like text, it’s tempting to assume human psychology tests can evaluate them. But that’s not true—human psychology tests rely on many assumptions that may not apply to large language models.”

Webb acknowledges the complexity of these questions. He notes that despite GPT-3 outperforming undergraduates on certain tests, it struggled on others. For example, it failed an analogical reasoning test involving physical objects that children can easily solve.

In this test, Webb and colleagues presented GPT-3 with a story about a magical genie transferring jewels between two bottles. They then asked how to transfer gumballs from one bowl to another using objects like a posterboard and a cardboard tube. The story provided hints for solving the problem, but GPT-3’s proposed solutions were convoluted and mechanically unsound.

Webb explains, “These systems struggle with things involving real-world understanding, like basic physics or social interactions—things that come naturally to people.”

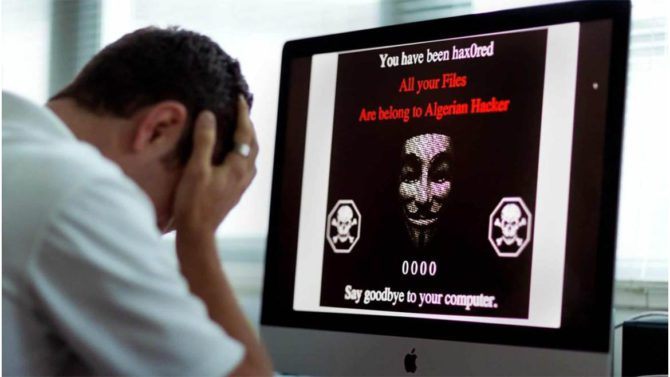

So how do we make sense of a machine that passes the bar exam but struggles with preschool-level tasks? Large language models like GPT-4 are trained on massive amounts of text from the internet, including books, blogs, reports, and social media. It’s possible that a model saw many past exam papers during training and simply learned to reproduce the answers.

Webb says, “Many of these tests—questions and answers—are available online. They’re likely in GPT-3’s and GPT-4’s training data, so it’s hard to draw clear conclusions.”

OpenAI says it checked that the tests given to GPT-4 didn’t overlap with its training data. For its collaboration with Microsoft, it used paywalled test questions to make sure they weren’t in GPT-4’s training data. But these precautions aren’t foolproof: GPT-4 might still have encountered similar tests.

When Horace He, a machine-learning engineer, tested GPT-4 using questions from a coding competition website, he found that it scored well on questions published before 2021 but poorly on those published after. Others have noted a similar drop in GPT-4’s test scores for material from after 2021. This suggests that the model may have memorized answers rather than truly understanding the problems.
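
The before-and-after comparison described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the procedure He or anyone else actually used: the function name, the cutoff date, and every record in the list are made up for the example.

```python
from datetime import date


def pass_rate_by_cutoff(results, cutoff):
    """Split (publication_date, solved) records at `cutoff` and return
    the pass rate before and after it (None for an empty group)."""
    before = [solved for published, solved in results if published < cutoff]
    after = [solved for published, solved in results if published >= cutoff]

    def rate(outcomes):
        return sum(outcomes) / len(outcomes) if outcomes else None

    return rate(before), rate(after)


# Hypothetical records: when each problem was published and whether
# the model solved it. These are NOT real GPT-4 results.
results = [
    (date(2019, 3, 1), True),
    (date(2019, 9, 15), True),
    (date(2020, 5, 1), True),
    (date(2020, 11, 3), False),
    (date(2021, 6, 9), False),
    (date(2022, 2, 14), False),
    (date(2022, 7, 30), True),
    (date(2023, 1, 12), False),
]

# Assumed cutoff for illustration only.
before_rate, after_rate = pass_rate_by_cutoff(results, date(2021, 1, 1))
print(f"before cutoff: {before_rate:.0%}, after cutoff: {after_rate:.0%}")
```

A large gap between the two rates is consistent with memorization, but it is not proof: newer problems could also simply be harder.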

To avoid this possibility, Webb created new types of tests from scratch. “We’re interested in these models’ ability to solve new types of problems,” he explains.

Webb and his colleagues adapted a test called Raven’s Progressive Matrices to assess analogical reasoning. A Raven’s problem shows a series of shapes arranged according to a pattern and asks the test taker to work out that pattern and apply it to a new set of shapes. Instead of images, the researchers encoded the problems as sequences of numbers, which made it unlikely that the tests appeared in the training data.
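
To give a feel for what a number-based matrix problem might look like, here is a minimal sketch. It is not the researchers’ actual generator or prompt format: the function names, the single “constant step” rule, and the bracketed text layout are all assumptions made for illustration.

```python
def make_progression_matrix(start, row_step, col_step, size=3):
    """Build a size x size grid whose values grow by `col_step` across
    each row and by `row_step` down each column."""
    return [
        [start + r * row_step + c * col_step for c in range(size)]
        for r in range(size)
    ]


def format_problem(matrix):
    """Render the grid as text with the final cell blanked out.
    Returns (prompt, answer)."""
    answer = matrix[-1][-1]
    lines = []
    for r, row in enumerate(matrix):
        cells = [str(value) for value in row]
        if r == len(matrix) - 1:
            cells[-1] = "?"  # the cell the solver must fill in
        lines.append("[" + " ".join(cells) + "]")
    return "\n".join(lines), answer


# Hypothetical example problem with an assumed rule.
matrix = make_progression_matrix(start=1, row_step=3, col_step=1)
prompt, answer = format_problem(matrix)
print(prompt)
print("answer:", answer)
```

Because such problems can be generated fresh with arbitrary rules and starting values, a specific instance is unlikely to have appeared verbatim in any training set.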

Mitchell appreciates Webb’s work but has reservations. She developed her own analogical reasoning test, called ConceptARC, which uses encoded sequences of shapes taken from the Abstraction and Reasoning Corpus (ARC) data set. In her experiments, GPT-4 scored worse than people on these tests.

She also points out that encoding images into sequences of numbers makes the problem easier because it removes the visual aspect. “Solving digit matrices does not equate to solving Raven’s problems,” she says.

Fragile Tests

Large language models’ performance is fragile. Among humans, if someone does well on a test, we expect them to do well on similar tests. But with large language models, even a slight change to a test can drastically alter the score.

“AI evaluation hasn’t been done in a way that allows us to understand these models’ capabilities,” says Lucy Cheke, a psychologist at the University of Cambridge. “It’s reasonable to test a system’s performance on a specific task, but it’s not valid to make claims about broader abilities.”

For instance, a team of Microsoft researchers claimed in a paper to have found “sparks of artificial general intelligence” in GPT-4. They assessed the model with various tests, one of which involved stacking objects in a stable manner. GPT-4’s response was impressive, but when Mitchell tried a similar question, GPT-4’s answer was off-base.

She asked GPT-4 how to stack a toothpick, a bowl of pudding, a glass of water, and a marshmallow. GPT-4 suggested sticking the toothpick in the pudding and the marshmallow on the toothpick, then balancing the full glass of water on the marshmallow. The response was creative but impractical.
