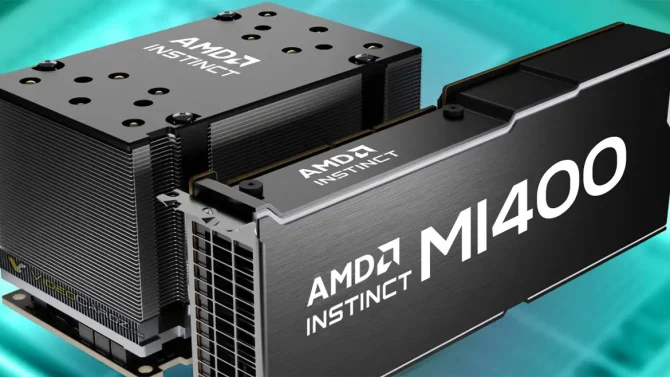

AMD Confirms Development of Next-Generation Instinct MI400 Series AI Accelerators

AMD is pursuing an aggressive strategy in the AI market and has confirmed plans for its upcoming Instinct MI400 AI accelerators.

AMD’s AI Ambitions: Next-Gen Instinct MI400 Accelerators Confirmed

AMD has officially confirmed that it is developing the Instinct “MI400” series. The announcement is hardly a surprise, since a Lenovo VP had already indicated that these next-generation accelerators were on AMD’s roadmap.

During AMD’s recent Q2 earnings call, CEO Lisa Su mentioned the upcoming Instinct MI400 AI accelerators but shared no specific details. Like the MI300 series, the MI400 accelerators are expected to be offered in multiple configurations.

“To meticulously survey these workloads and the investments we are presently making, as well as those we intend to pursue with our subsequent MI400 series and beyond, is to unequivocally recognize the formidable and adept trajectory of our hardware roadmap. The discourse encompassing AMD has, to a large extent, centered around the roadmap for software, and we do discern a perceptible shift taking place in this domain.” (Dr. Lisa Su, AMD CEO)

Dr. Su’s statement underscores that the Instinct lineup is well equipped on the hardware side, with top-tier specifications. AMD is widely seen as lagging in software, however, particularly in robust support for generative AI applications. NVIDIA has taken the lead with technologies such as “NVIDIA ACE” and “DLDSR”, and AMD is now working to strengthen its software ecosystem. If those efforts pay off, they could significantly raise the Instinct platform’s appeal.

As reported earlier, alongside the upcoming Instinct MI400 lineup, AMD has announced plans for cut-down MI300 variants for the Chinese market in order to comply with US export restrictions. Exact specifications are not yet known, but we expect Team Red to follow an approach similar to NVIDIA’s with its H800 and A800 GPUs.

NVIDIA has captured most of the AI “bonanza” so far, posting substantial sales as demand keeps climbing, while rivals such as Intel and AMD have made progress, albeit late. Still, both are positioning their offerings to compete on performance and value, which could make them formidable competitors.

AMD Radeon Instinct Accelerators:

| ACCELERATOR NAME | AMD INSTINCT MI400 | AMD INSTINCT MI300 | AMD INSTINCT MI250X | AMD INSTINCT MI250 | AMD INSTINCT MI210 | AMD INSTINCT MI100 | AMD RADEON INSTINCT MI60 | AMD RADEON INSTINCT MI50 | AMD RADEON INSTINCT MI25 | AMD RADEON INSTINCT MI8 | AMD RADEON INSTINCT MI6 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CPU Architecture | Zen 5 (Exascale APU) | Zen 4 (Exascale APU) | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| GPU Architecture | CDNA 4 | Aqua Vanjaram (CDNA 3) | Aldebaran (CDNA 2) | Aldebaran (CDNA 2) | Aldebaran (CDNA 2) | Arcturus (CDNA 1) | Vega 20 | Vega 20 | Vega 10 | Fiji XT | Polaris 10 |
| GPU Process Node | 4nm | 5nm+6nm | 6nm | 6nm | 6nm | 7nm FinFET | 7nm FinFET | 7nm FinFET | 14nm FinFET | 28nm | 14nm FinFET |
| GPU Chiplets | TBD | 8 (MCM) | 2 (MCM) 1 (Per Die) | 2 (MCM) 1 (Per Die) | 1 (Monolithic) | 1 (Monolithic) | 1 (Monolithic) | 1 (Monolithic) | 1 (Monolithic) | 1 (Monolithic) | 1 (Monolithic) |
| GPU Cores | TBD | Up To 19,456 | 14,080 | 13,312 | 6656 | 7680 | 4096 | 3840 | 4096 | 4096 | 2304 |
| GPU Clock Speed | TBD | TBD | 1700 MHz | 1700 MHz | 1700 MHz | 1500 MHz | 1800 MHz | 1725 MHz | 1500 MHz | 1000 MHz | 1237 MHz |
| FP16 Compute | TBD | TBD | 383 TFLOPs | 362 TFLOPs | 181 TFLOPs | 185 TFLOPs | 29.5 TFLOPs | 26.5 TFLOPs | 24.6 TFLOPs | 8.2 TFLOPs | 5.7 TFLOPs |
| FP32 Compute | TBD | TBD | 95.7 TFLOPs | 90.5 TFLOPs | 45.3 TFLOPs | 23.1 TFLOPs | 14.7 TFLOPs | 13.3 TFLOPs | 12.3 TFLOPs | 8.2 TFLOPs | 5.7 TFLOPs |
| FP64 Compute | TBD | TBD | 47.9 TFLOPs | 45.3 TFLOPs | 22.6 TFLOPs | 11.5 TFLOPs | 7.4 TFLOPs | 6.6 TFLOPs | 768 GFLOPs | 512 GFLOPs | 384 GFLOPs |
| VRAM | TBD | 192 GB HBM3 | 128 GB HBM2e | 128 GB HBM2e | 64 GB HBM2e | 32 GB HBM2 | 32 GB HBM2 | 16 GB HBM2 | 16 GB HBM2 | 4 GB HBM1 | 16 GB GDDR5 |
| Memory Clock | TBD | 5.2 Gbps | 3.2 Gbps | 3.2 Gbps | 3.2 Gbps | 1200 MHz | 1000 MHz | 1000 MHz | 945 MHz | 500 MHz | 1750 MHz |
| Memory Bus | TBD | 8192-bit | 8192-bit | 8192-bit | 4096-bit | 4096-bit | 4096-bit | 4096-bit | 2048-bit | 4096-bit | 256-bit |
| Memory Bandwidth | TBD | 5.2 TB/s | 3.2 TB/s | 3.2 TB/s | 1.6 TB/s | 1.23 TB/s | 1 TB/s | 1 TB/s | 484 GB/s | 512 GB/s | 224 GB/s |
| Form Factor | TBD | OAM | OAM | OAM | Dual Slot Card | Dual Slot, Full Length | Dual Slot, Full Length | Dual Slot, Full Length | Dual Slot, Full Length | Dual Slot, Half Length | Single Slot, Full Length |
| Cooling | TBD | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling |
| TDP (Max) | TBD | 750W | 560W | 500W | 300W | 300W | 300W | 300W | 300W | 175W | 150W |
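Several columns in the table can be cross-checked with simple arithmetic. The Python sketch below is an illustrative check, not AMD’s methodology, and the function names are hypothetical. It assumes peak bandwidth equals bus width times per-pin data rate divided by 8, that HBM transfers data twice per listed memory clock and GDDR5 four times, and that each GPU core completes one fused multiply-add (2 FLOPs) per clock.

```python
# Illustrative cross-check of the spec table (hypothetical helpers, not AMD's method).
# Assumption: per-pin data rates are derived from the listed memory clocks
# (HBM = 2 transfers per clock, GDDR5 = 4 transfers per listed clock).

def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


def peak_fp32_tflops(cores: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Peak FP32 in TFLOPs, assuming one FMA (2 FLOPs) per core per clock."""
    return cores * clock_mhz * 1e6 * flops_per_clock / 1e12


# (name, bus width in bits, assumed per-pin rate in Gbit/s, bandwidth listed in GB/s)
MEMORY = [
    ("MI300", 8192, 5.2, 5200),
    ("MI250X", 8192, 3.2, 3200),
    ("MI210", 4096, 3.2, 1600),
    ("MI100", 4096, 2.4, 1230),  # 1200 MHz HBM2, double data rate
    ("MI60", 4096, 2.0, 1000),   # 1000 MHz HBM2
    ("MI25", 2048, 1.89, 484),   # 945 MHz HBM2
    ("MI8", 4096, 1.0, 512),     # 500 MHz HBM1
    ("MI6", 256, 7.0, 224),      # 1750 MHz GDDR5, quad data rate
]

# (name, GPU cores, clock in MHz, FP32 TFLOPs listed)
COMPUTE = [
    ("MI100", 7680, 1500, 23.1),
    ("MI60", 4096, 1800, 14.7),
    ("MI50", 3840, 1725, 13.3),
    ("MI25", 4096, 1500, 12.3),
    ("MI8", 4096, 1000, 8.2),
    ("MI6", 2304, 1237, 5.7),
]

if __name__ == "__main__":
    for name, bus, rate, listed in MEMORY:
        print(f"{name:7s} computed {peak_bandwidth_gbs(bus, rate):7.1f} GB/s, listed {listed} GB/s")
    for name, cores, clock, listed in COMPUTE:
        print(f"{name:7s} computed {peak_fp32_tflops(cores, clock):5.2f} TFLOPs, listed {listed} TFLOPs")
```

The computed bandwidths land within about 3% of the listed values; for example, 8192 bits × 5.2 Gbps / 8 gives roughly 5.3 TB/s for the MI300, against the 5.2 TB/s in the table. The FP32 formula is applied only to the MI100 and older parts, because the MI210, MI250 and MI250X figures listed here are double what it produces.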